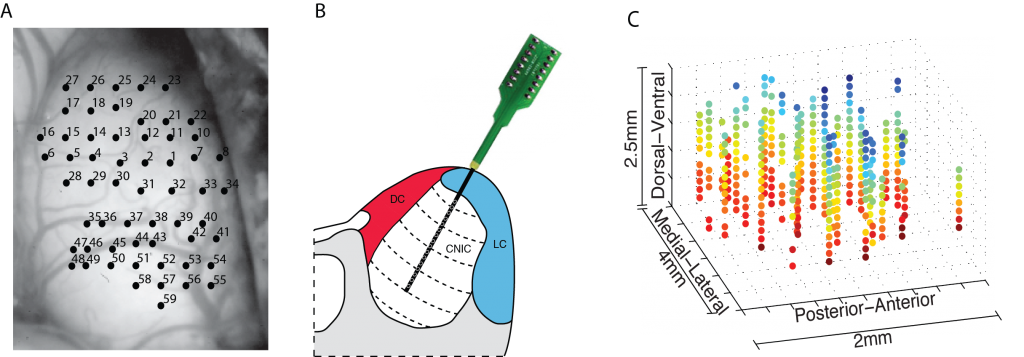

We study how sounds are encoded in the auditory midbrain and cortex, two critical neural structures in the mammalian auditory system. These structures perform key computations for acoustic feature extraction and are essential for sound recognition. We use large-scale neural recording arrays (NeuroNexus), as depicted above (Rodriguez et al. 2010), to record brain activity simultaneously from multiple neurons and recording locations. This allows us to measure how sounds are decomposed into elementary acoustic features and how the underlying neural organization contributes to sound recognition behaviors.

Decoding Brain Activity

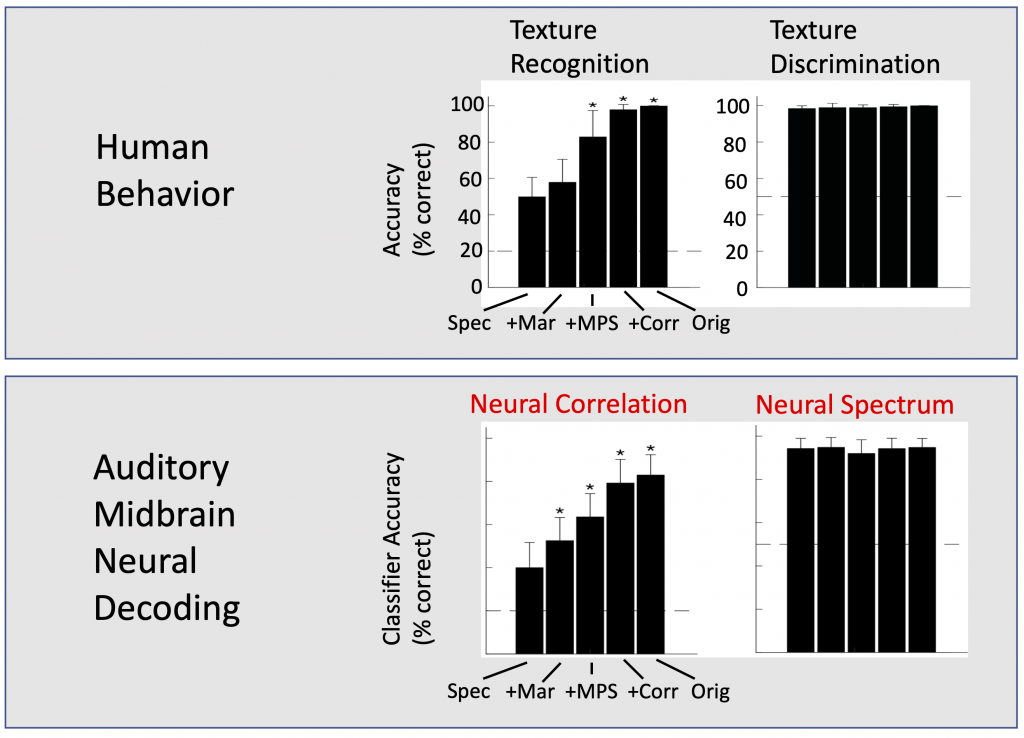

We are also interested in understanding the encoding strategies and mechanisms the brain uses for sound recognition, categorization, and discrimination. We have recently used a variety of neural classifiers (Osman et al. 2018; Sadeghi et al. 2019; Zhai et al. 2020) to decode brain activity and predict auditory behavior and perception. The figure above shows an example from a sound texture recognition and discrimination task (e.g., Sadeghi et al. 2019; Zhai et al. 2020): neural population activity decoded from the principal auditory midbrain nucleus (the inferior colliculus) accurately predicts human behavioral results.
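As a generic illustration of what "decoding" population activity means, here is a minimal sketch of one simple decoder. It is not the specific classifiers used in the studies cited above. It assumes each trial is summarized as a vector of spike counts (one entry per recorded neuron) and decodes stimulus identity with a nearest-centroid classifier under leave-one-out cross-validation. The function name, the Euclidean distance metric, and the synthetic Poisson data are illustrative assumptions.

```python
import numpy as np

def leave_one_out_nearest_centroid(counts, labels):
    """Hypothetical example decoder: nearest-centroid classification of
    population spike counts, scored with leave-one-out cross-validation.

    counts: array (n_trials, n_neurons) of spike counts per trial.
    labels: array (n_trials,) giving the stimulus presented on each trial.
    Returns the fraction of held-out trials decoded correctly.
    """
    counts = np.asarray(counts, dtype=float)
    labels = np.asarray(labels)
    classes, n_per_class = np.unique(labels, return_counts=True)
    if np.any(n_per_class < 2):
        raise ValueError("each stimulus needs at least 2 trials for leave-one-out")
    correct = 0
    for i in range(len(labels)):
        train = np.arange(len(labels)) != i  # hold out trial i
        # Mean population response (centroid) for each stimulus, training trials only
        centroids = np.array([counts[train & (labels == c)].mean(axis=0) for c in classes])
        # Assign the held-out trial to the stimulus with the closest centroid
        dists = np.linalg.norm(centroids - counts[i], axis=1)
        correct += int(classes[np.argmin(dists)] == labels[i])
    return correct / len(labels)

# Usage with synthetic data (assumed Poisson spiking; not real recordings)
rng = np.random.default_rng(0)
n_stim, n_trials, n_neurons = 3, 20, 16
rates = rng.uniform(2, 20, size=(n_stim, n_neurons))  # hypothetical mean counts
labels = np.repeat(np.arange(n_stim), n_trials)
counts = rng.poisson(rates[labels])
print(leave_one_out_nearest_centroid(counts, labels))
```

Holding out each trial before computing the centroids keeps the accuracy estimate honest: the decoder is always tested on a trial it never saw, which is what allows decoded accuracy to be compared against behavioral performance.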

Neurotechnologies

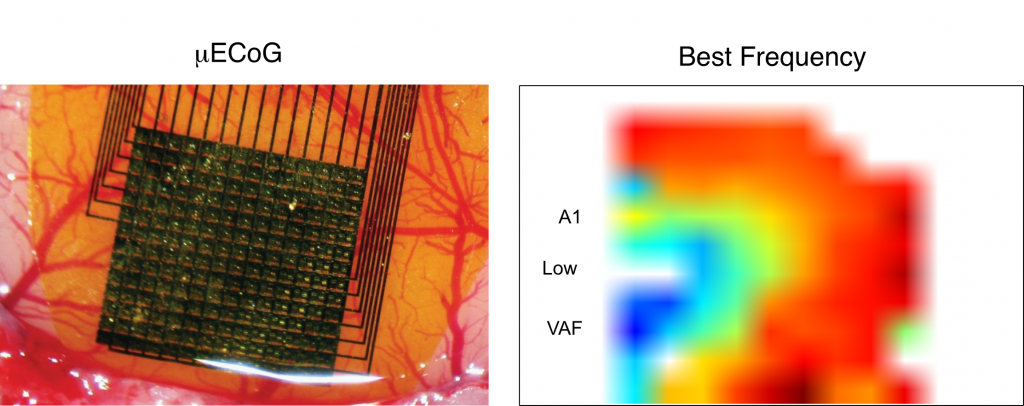

The lab is also interested in developing and refining technologies for performing and analyzing large-scale brain recordings. Below is an example of a 192-channel recording array that our group and collaborators used to study auditory cortex organization in the rat (Escabi et al. 2014).

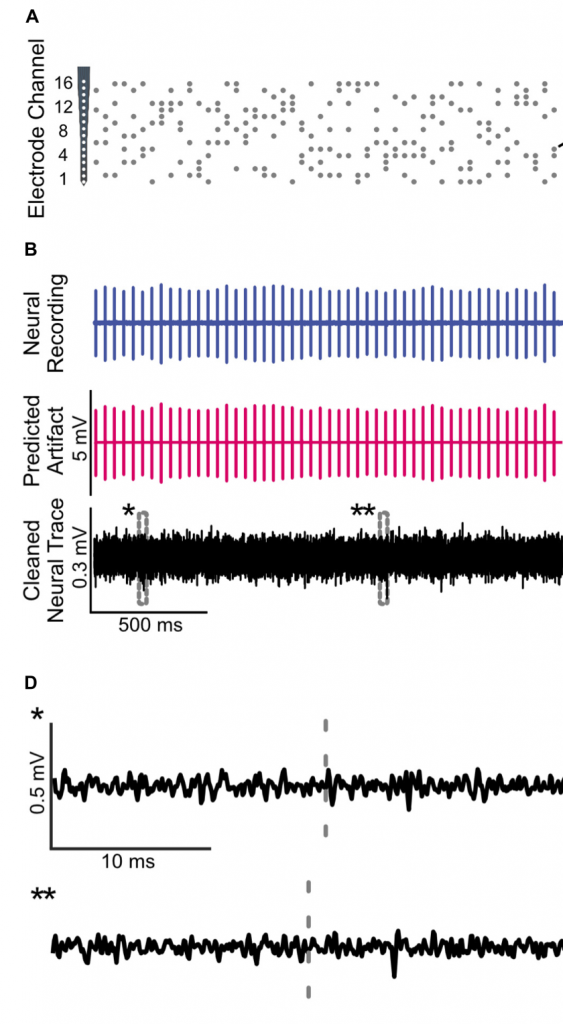

Another recent example is the development of technologies for electrically stimulating the brain. We developed a multi-channel artifact removal algorithm (Sadeghi et al. 2020) that can be used to study brain transformations and has potential applications for brain-machine interfaces and prosthetic devices.
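To give a flavor of what stimulation artifact removal involves, here is a minimal sketch of one common, generic approach: per-channel template subtraction. It is not the algorithm of Sadeghi et al. 2020. It assumes pulse onset times are known, that the artifact waveform on a given channel is the same on every pulse, and that post-pulse windows do not overlap. The function and parameter names are hypothetical.

```python
import numpy as np

def subtract_artifact_template(data, pulse_onsets, window):
    """Hypothetical example: remove stimulation artifacts by subtracting a
    per-channel average artifact (template) after each pulse.

    data: array (n_channels, n_samples) of recorded voltages.
    pulse_onsets: sample indices at which stimulation pulses were delivered.
    window: number of samples after each onset spanned by the artifact.
    Assumes windows do not overlap; onsets whose window would run past the
    end of the recording are ignored.
    """
    data = np.asarray(data, dtype=float)
    onsets = [t for t in pulse_onsets if t + window <= data.shape[1]]
    cleaned = data.copy()
    if not onsets:
        return cleaned
    # Stack post-pulse segments: shape (n_pulses, n_channels, window)
    segments = np.stack([data[:, t:t + window] for t in onsets])
    # Averaging across pulses keeps the time-locked artifact and
    # attenuates neural activity that is not locked to the pulse
    template = segments.mean(axis=0)
    for t in onsets:
        cleaned[:, t:t + window] -= template
    return cleaned

# Usage with synthetic data (illustrative values only)
rng = np.random.default_rng(1)
n_samples = 2000
neural = 0.2 * rng.standard_normal((4, n_samples))  # stand-in for neural activity
onsets = np.arange(100, 1900, 200)
shape = np.exp(-np.arange(20) / 4.0)  # hypothetical decaying artifact waveform
recording = neural.copy()
for k in onsets:
    recording[:, k:k + 20] += 10 * shape
cleaned = subtract_artifact_template(recording, onsets, 20)
print(np.abs(cleaned - neural).max())  # residual error after subtraction
```

In real recordings the artifact varies from pulse to pulse and across channels in correlated ways, which is why more elaborate multi-channel methods are used in practice; this sketch only shows the basic idea of estimating and subtracting the artifact.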