Complementary to the electrophysiology, neural coding, and modeling work in the lab, we also carry out human perception studies to understand perceptual capabilities. We are particularly interested in understanding how natural sounds are perceived by humans and which neural coding mechanisms contribute to perception. By combining physiology, modeling, and human psychoacoustics, we hope to develop more comprehensive theories of how natural sounds are represented and ultimately perceived by the brain.

Binaural Illusory Ripples

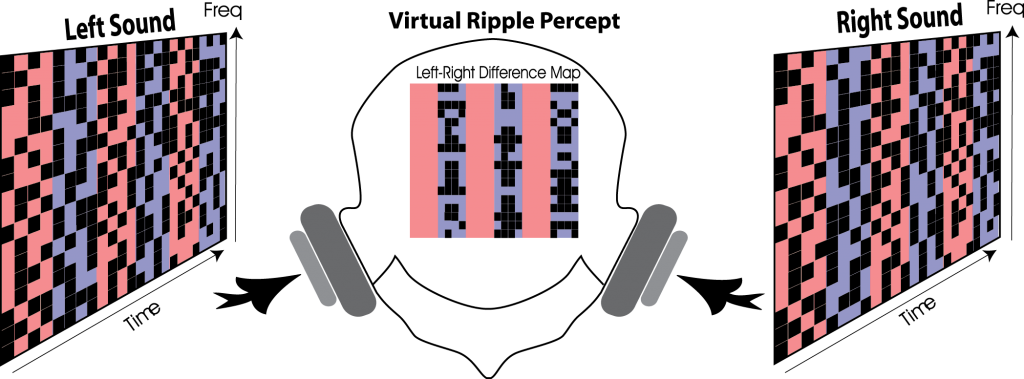

Binaural cues (interaural time and level differences) are well known to contribute to sound localization and segregation. We have developed a binaural random chord stereogram sound (Nassiri & Escabi 2008) that creates the illusion of dynamically varying sounds. These sounds are conceptually analogous to the random dot stereograms originally used by Béla Julesz (https://en.wikipedia.org/wiki/Béla_Julesz) to study binocular fusion, which create a spatial image percept despite lacking spatial cues. Human listeners are able to group dynamic spectro-temporal correlations between the two ears, which produces the perception of illusory moving ripple sounds.

Perception of Spectro-Temporal Cues by Normal Hearing and Cochlear Implant Listeners

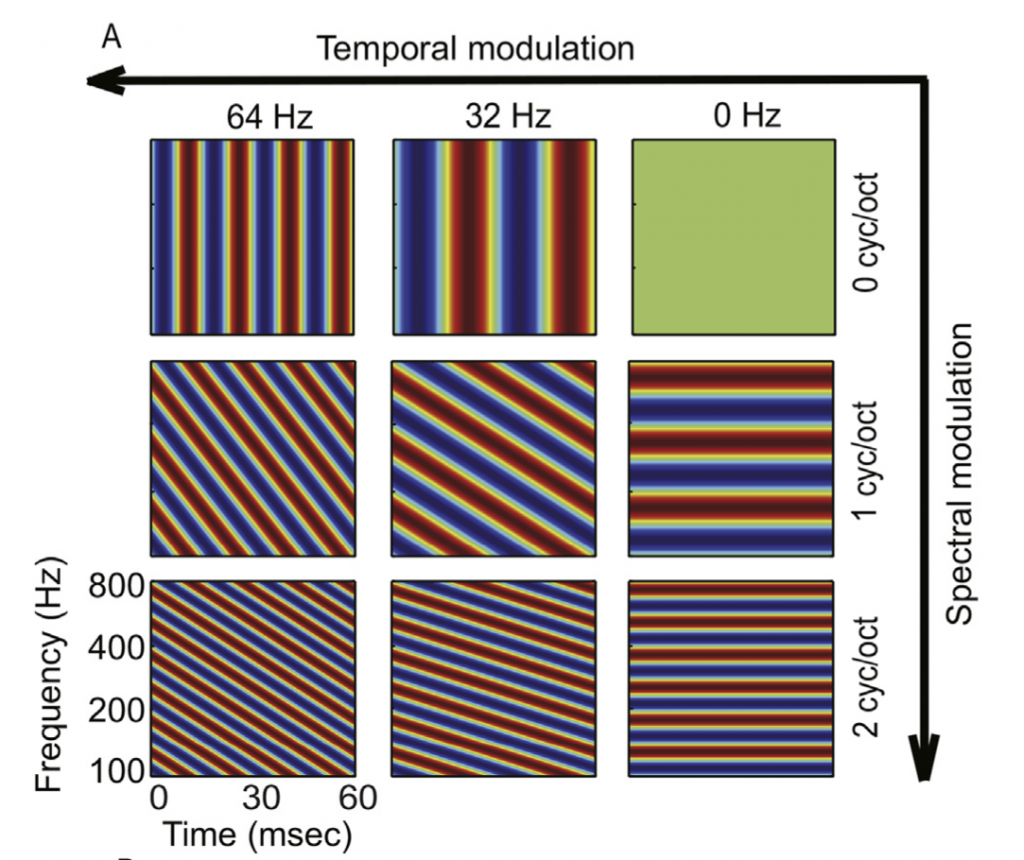

Along with collaborators Litovsky and Zheng (Zheng et al 2017), we characterized the spectro-temporal sensitivities of cochlear implant patients. Spectrograms of the moving ripple sounds used to study these sensitivities are shown below.

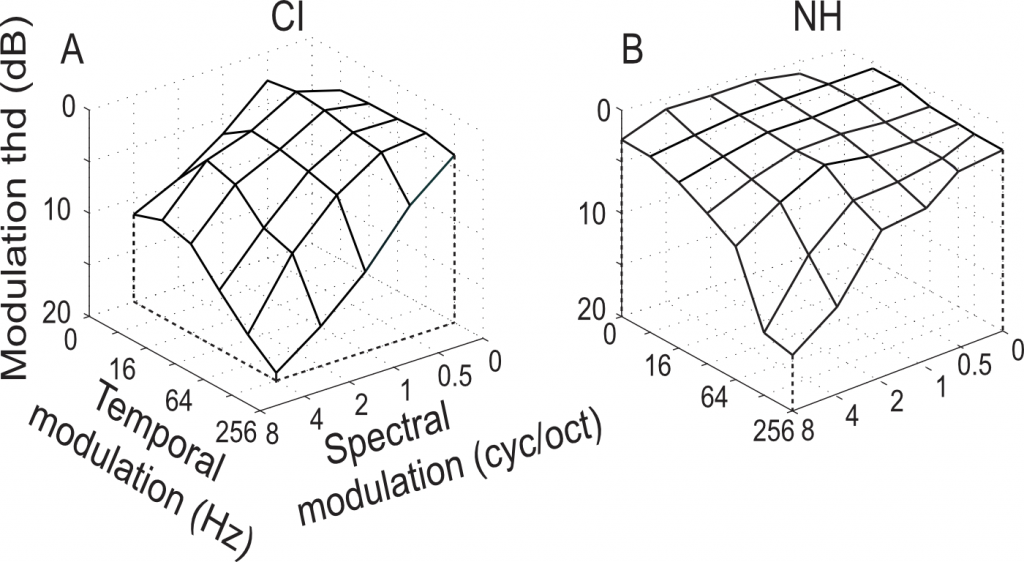
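As a rough illustration of what a moving ripple spectrogram looks like, the sketch below builds a sinusoidal spectro-temporal envelope on a time-by-octave grid. It is not the stimulus code used in the study: the function name `ripple_envelope`, the sinusoidal form, and all parameter values are assumptions chosen for illustration.

```python
import numpy as np

def ripple_envelope(duration_s=1.0, n_octaves=5.0, temporal_hz=4.0,
                    spectral_cyc_per_oct=0.5, depth=0.9,
                    n_time=200, n_freq=64):
    """Return (t, x, envelope) for a sinusoidal moving ripple.

    t is time in seconds, x is frequency in octaves above the lowest
    carrier, and envelope has shape (n_freq, n_time).
    All parameter values are illustrative assumptions.
    """
    t = np.linspace(0.0, duration_s, n_time, endpoint=False)
    x = np.linspace(0.0, n_octaves, n_freq, endpoint=False)
    # The phase advances with time (temporal modulation, Hz) and with
    # log-frequency (spectral modulation, cycles/octave).
    phase = 2.0 * np.pi * (temporal_hz * t[None, :]
                           + spectral_cyc_per_oct * x[:, None])
    envelope = 1.0 + depth * np.sin(phase)
    return t, x, envelope

t, x, env = ripple_envelope()
```

In this sketch, setting `temporal_hz=0` gives a purely spectral ripple and setting `spectral_cyc_per_oct=0` gives a purely temporal modulation, while nonzero values of both give a joint spectro-temporal ripple.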

The perceptual transfer functions of cochlear implant and normal hearing listeners (below) show that cochlear implant listeners have poorer spectral resolution than normal hearing subjects, consistent with prior studies. However, cochlear implant listeners benefit from perceiving spectro-temporal cues jointly, compared with purely spectral or purely temporal cues. A similar benefit is not observed for normal hearing listeners.

Speech Recognition In Noise

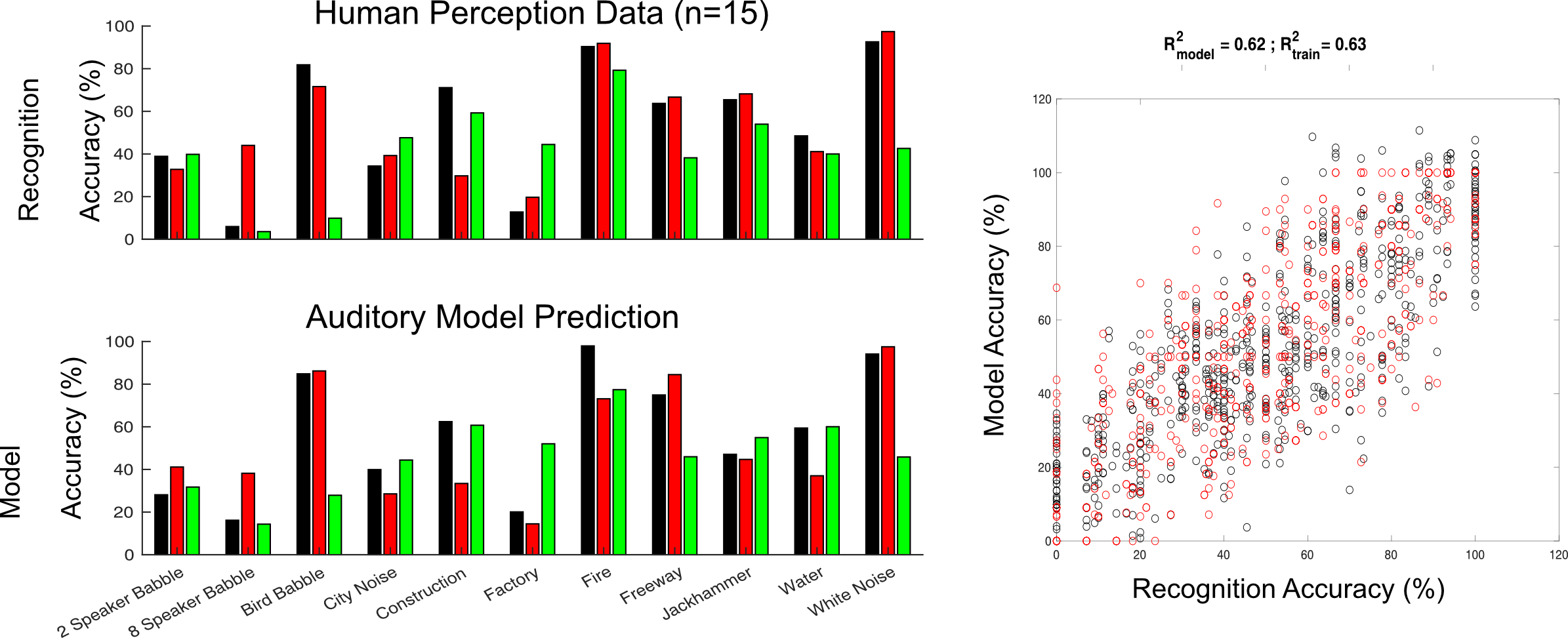

We are currently studying sound recognition and discrimination in the presence of natural sources of background noise. Natural background sounds are structurally complex, and each background sound interferes with speech in a unique way (as shown below for human listeners). Some background sounds interfere extensively with speech perception, while others have a more modest effect. We are trying to identify the acoustic cues that contribute to noise interference and to differences in speech comprehension. Using models, we are also trying to identify the neural coding mechanisms that account for, and are able to predict, the perceptual findings. The figure below shows human word recognition accuracy for different masking noises along with results predicted by a biologically inspired model.

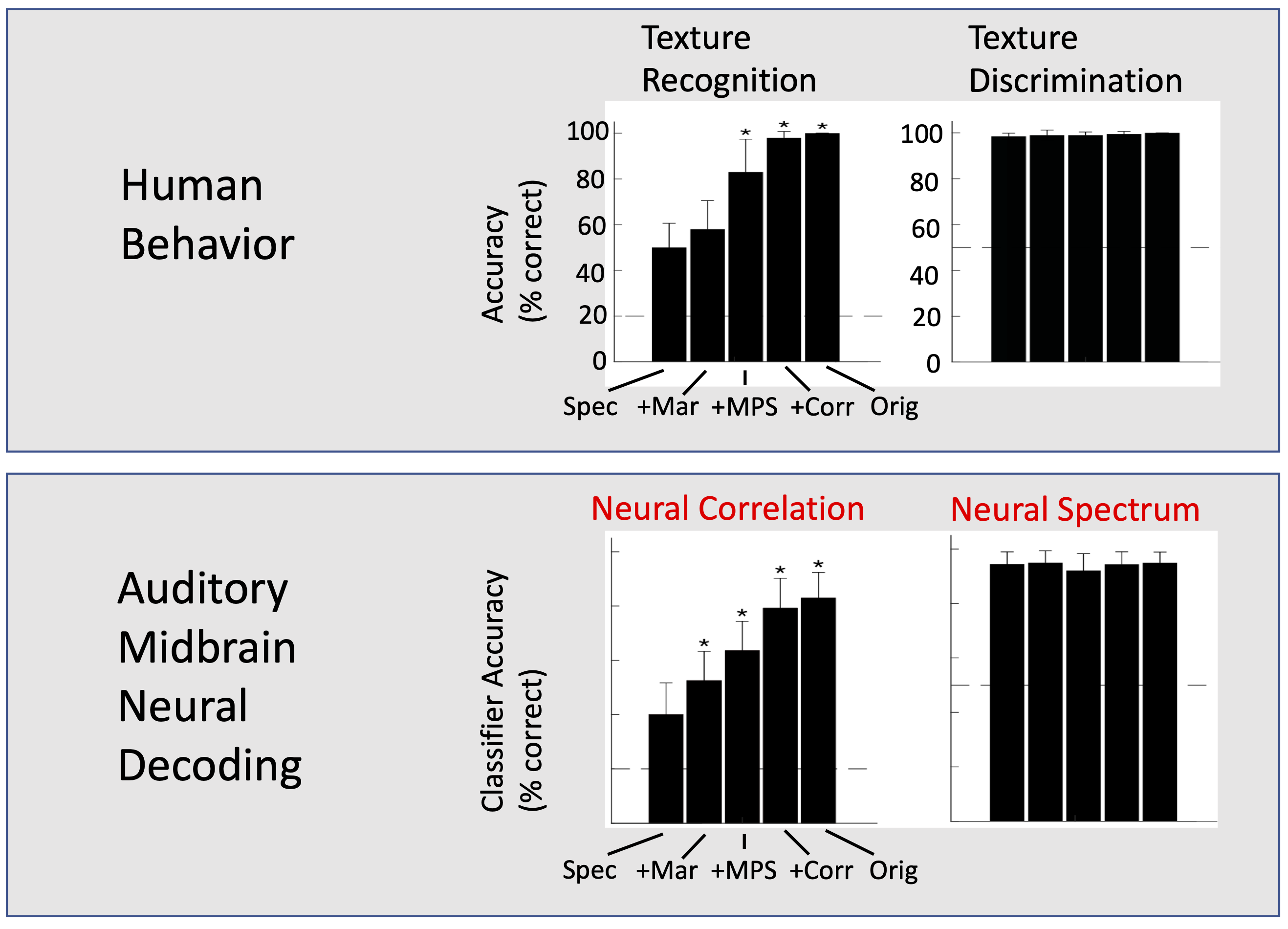

Texture Perception

Alongside our electrophysiological studies in the auditory midbrain, we are also exploring how texture sounds are perceived and recognized. Texture sounds (McDermott & Simoncelli 2013) are random stationary sounds that are well represented by summary statistics. Unlike speech or vocalizations, these sounds vary randomly over time and do not have a fixed set of spectro-temporal features that define them. For instance, the word “hello” will always have a relatively well defined phonetic structure and a spectrogram with repeatable features that occur in a relatively stable fashion over time. Running water sounds, by comparison, are completely random over time. We have been exploring the hypothesis that the critical features that allow for recognition and coding of these sounds are statistics of the sound and ultimately statistics of the neural activity. Below are human perceptual results for texture recognition and discrimination along with results obtained by decoding neural activity, which show a nearly identical trend (Zhai et al 2020).
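To make the summary-statistics idea concrete, the minimal sketch below computes a few time-averaged statistics (per-band mean, standard deviation, skewness, and cross-band correlations) from a set of band envelopes. It is not the analysis used in the cited work: the function `summary_statistics`, the choice of statistics, and the synthetic random "envelopes" are all assumptions for illustration.

```python
import numpy as np

def summary_statistics(envelopes):
    """Time-averaged statistics of band envelopes.

    envelopes: array of shape (n_bands, n_samples), a hypothetical
    stand-in for the envelopes of a bank of frequency channels.
    """
    mean = envelopes.mean(axis=1)
    std = envelopes.std(axis=1)
    centered = envelopes - mean[:, None]
    skew = (centered ** 3).mean(axis=1) / std ** 3
    corr = np.corrcoef(envelopes)  # cross-band correlation matrix
    return {"mean": mean, "std": std, "skew": skew, "corr": corr}

# Two independent realizations of the same random process (synthetic
# data, assumed here purely for illustration).
rng = np.random.default_rng(0)
a = np.abs(rng.standard_normal((8, 20000)))
b = np.abs(rng.standard_normal((8, 20000)))
stats_a = summary_statistics(a)
stats_b = summary_statistics(b)
```

The two realizations differ sample by sample, yet their summary statistics are close to each other. This is the sense in which a stationary random sound can be identified by its statistics rather than by a fixed sequence of features.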